Description

This project was written during my second-year training period at the University of Central Lancashire (UCLAN) in the UK, where I worked for the research group called Child Computer Interaction (ChiCI). I was there for three months as an Erasmus exchange student. After my work at the lab, I followed two modules called “Microprocessor-based system” and “Image and speech processing”.

The goal of the project was to develop a tool to analyse virtual-keyboard layouts using an already-existing gaze tracking device. The virtual keyboard layouts were already written in Java.

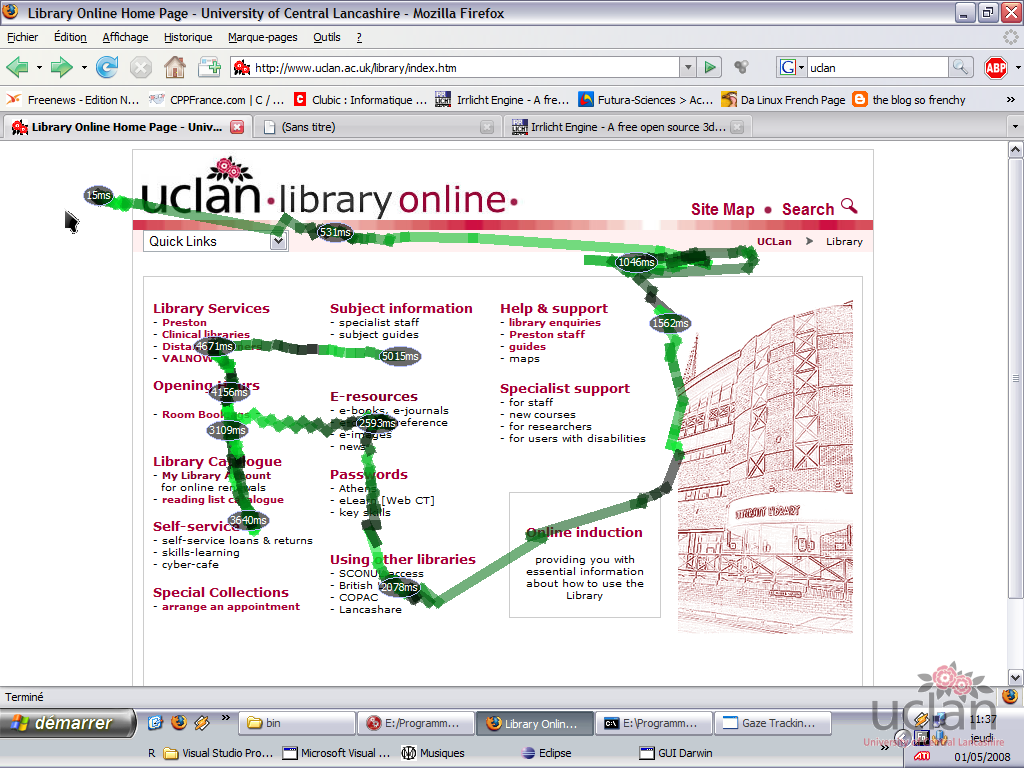

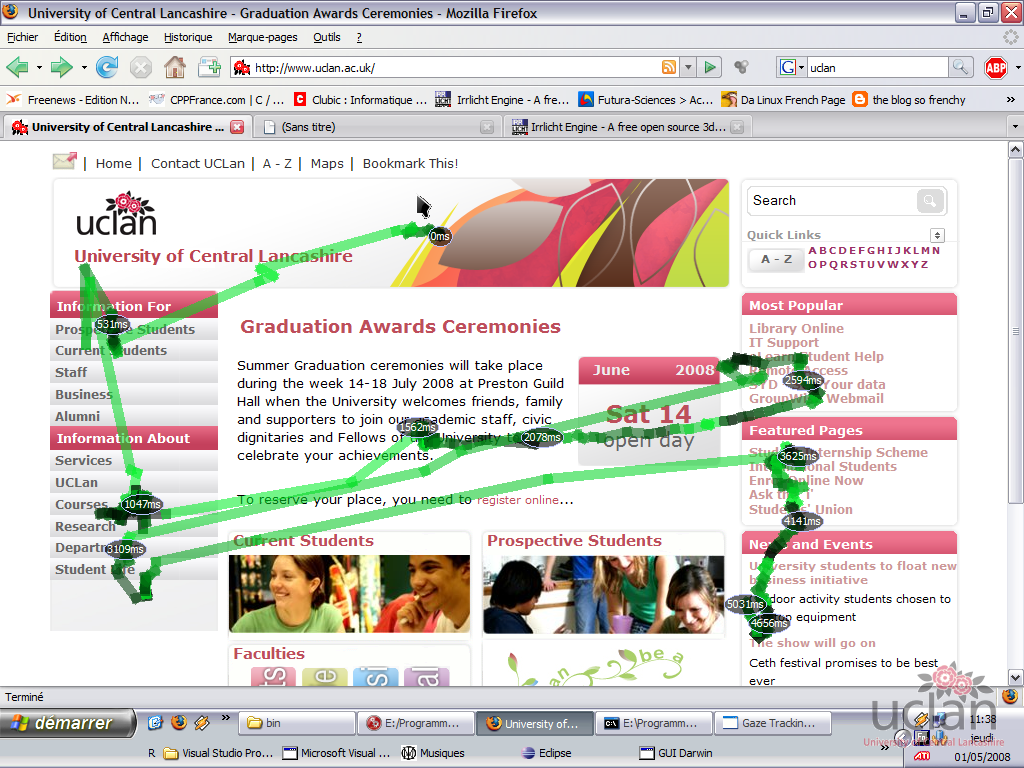

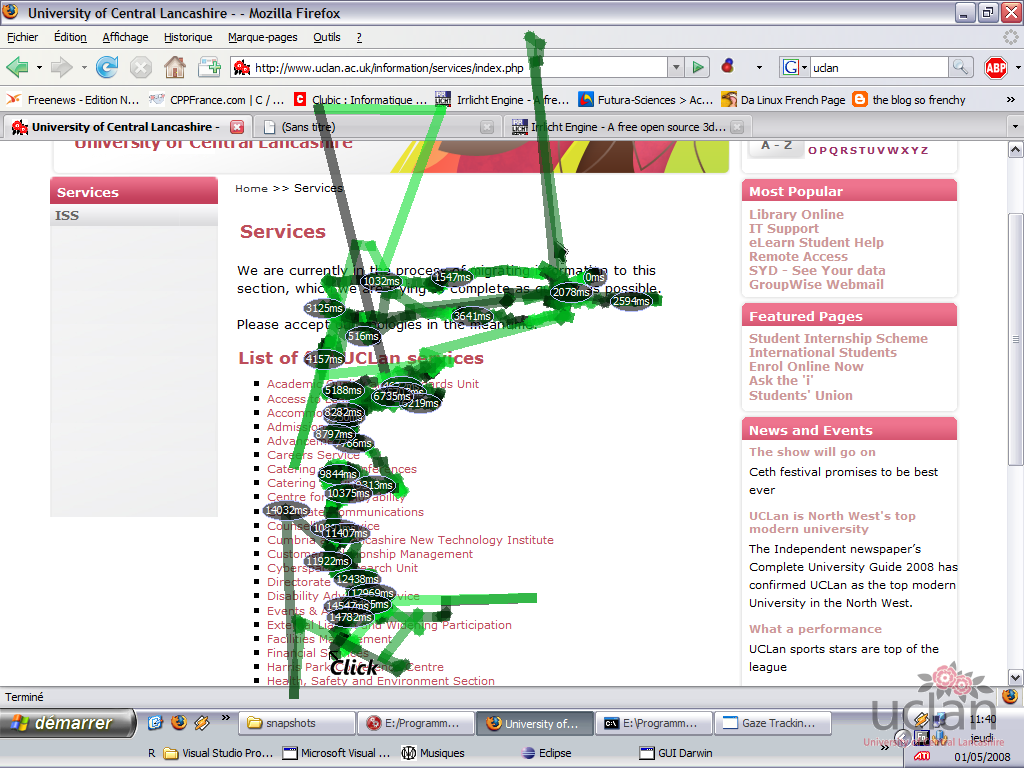

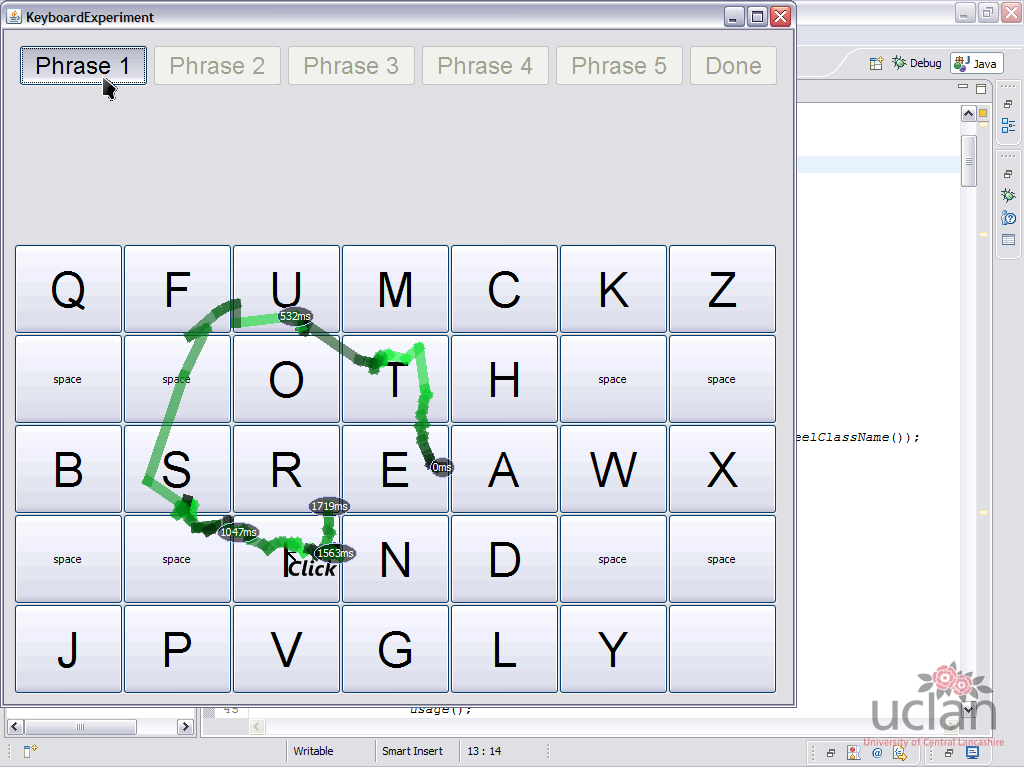

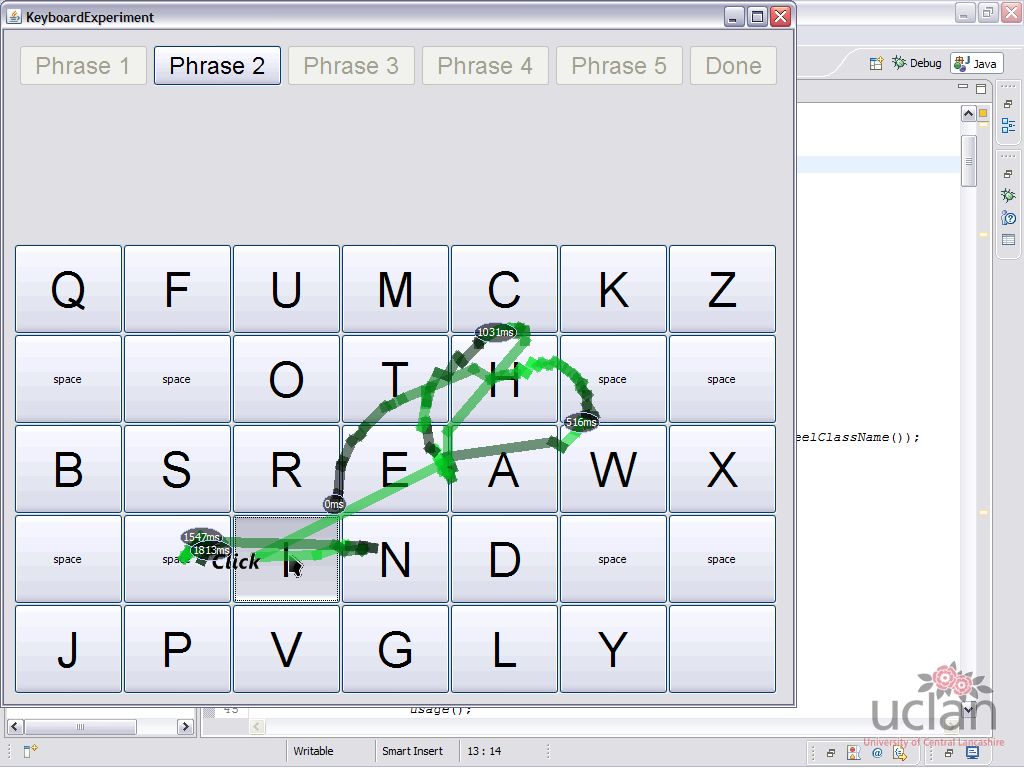

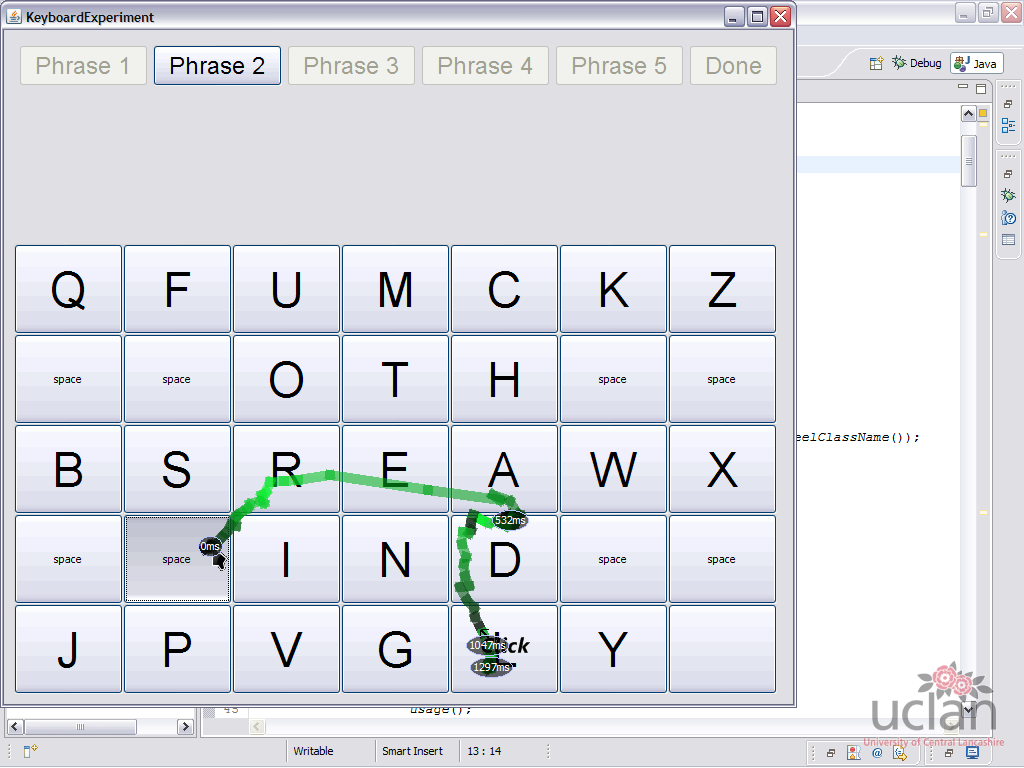

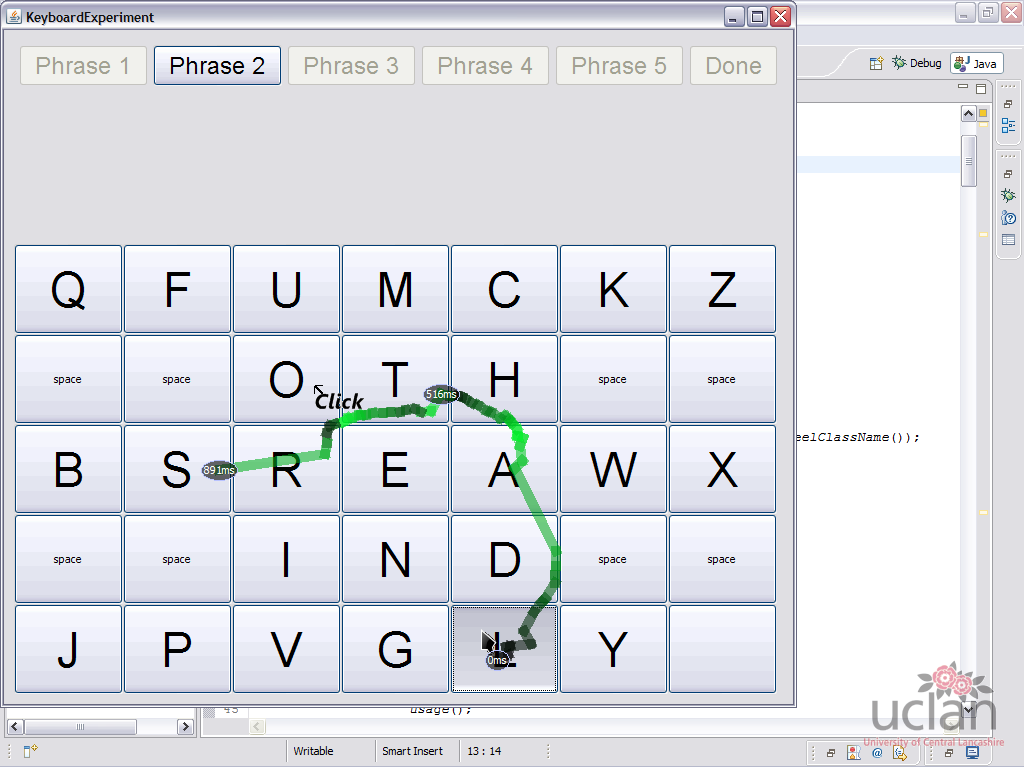

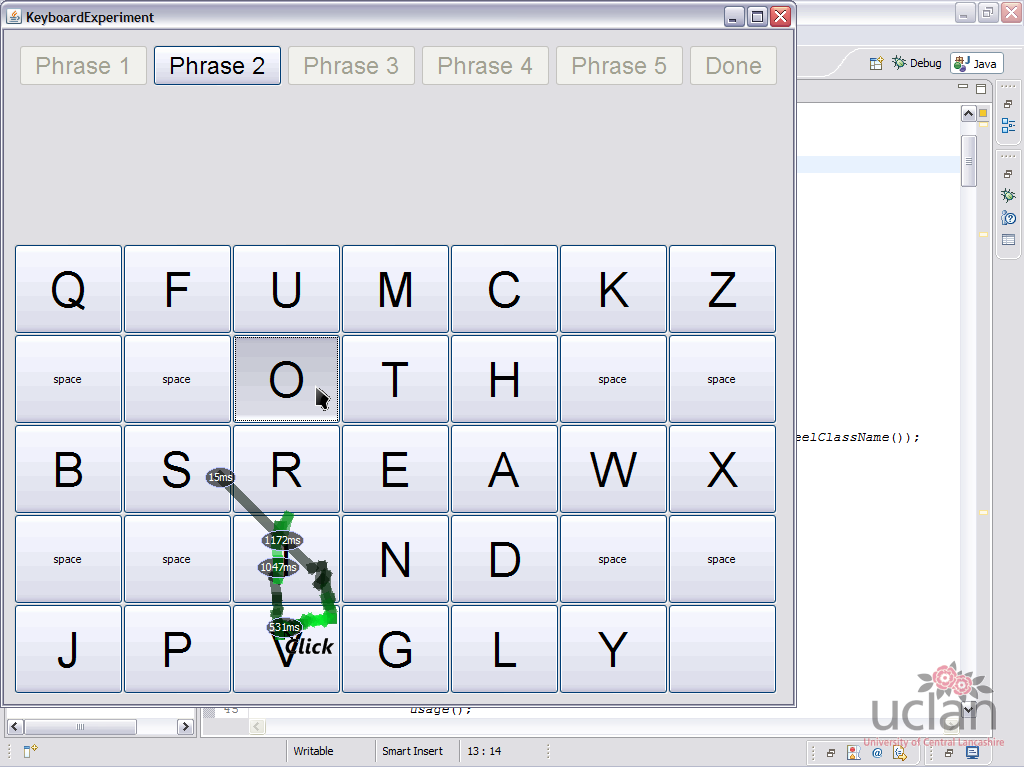

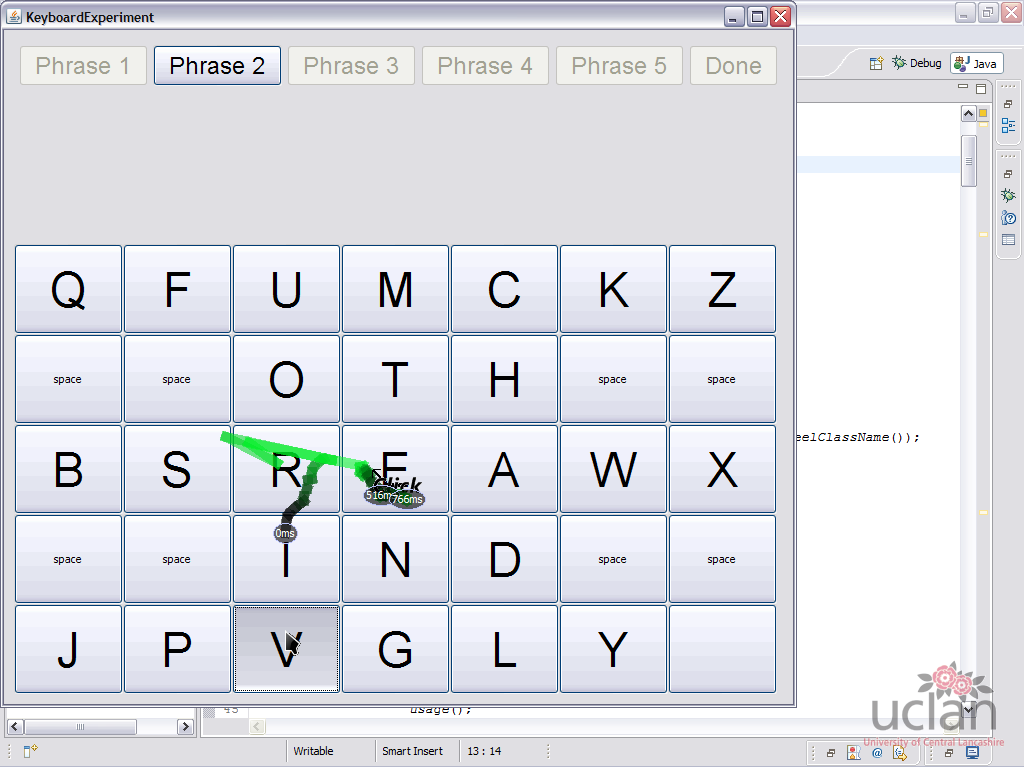

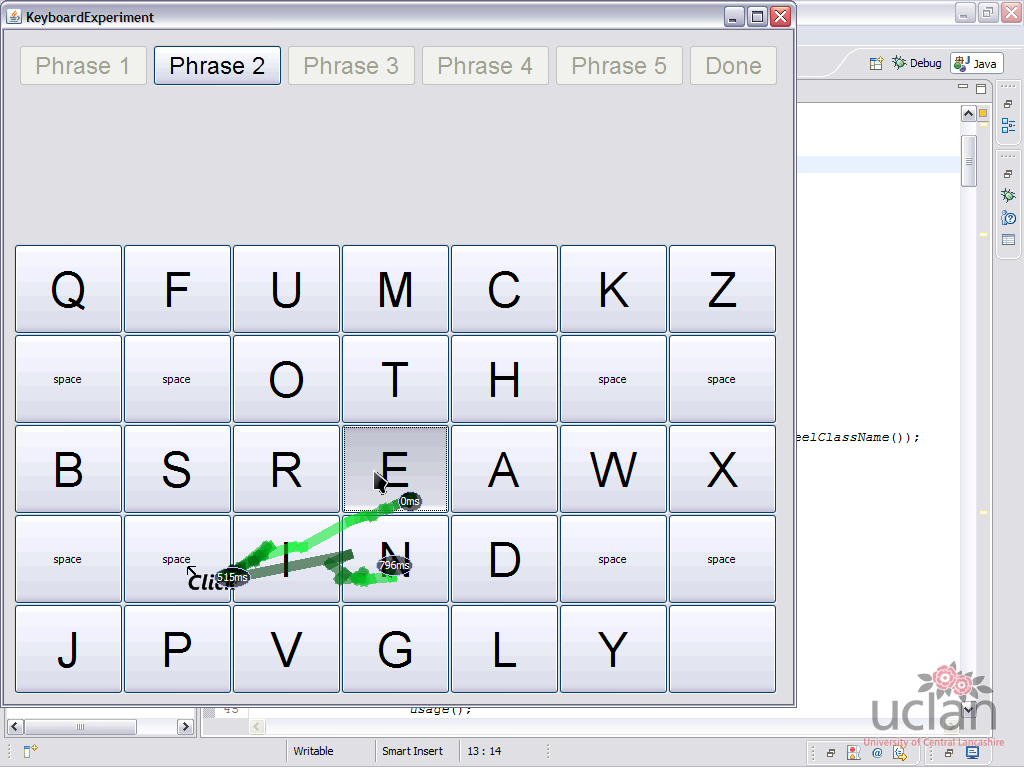

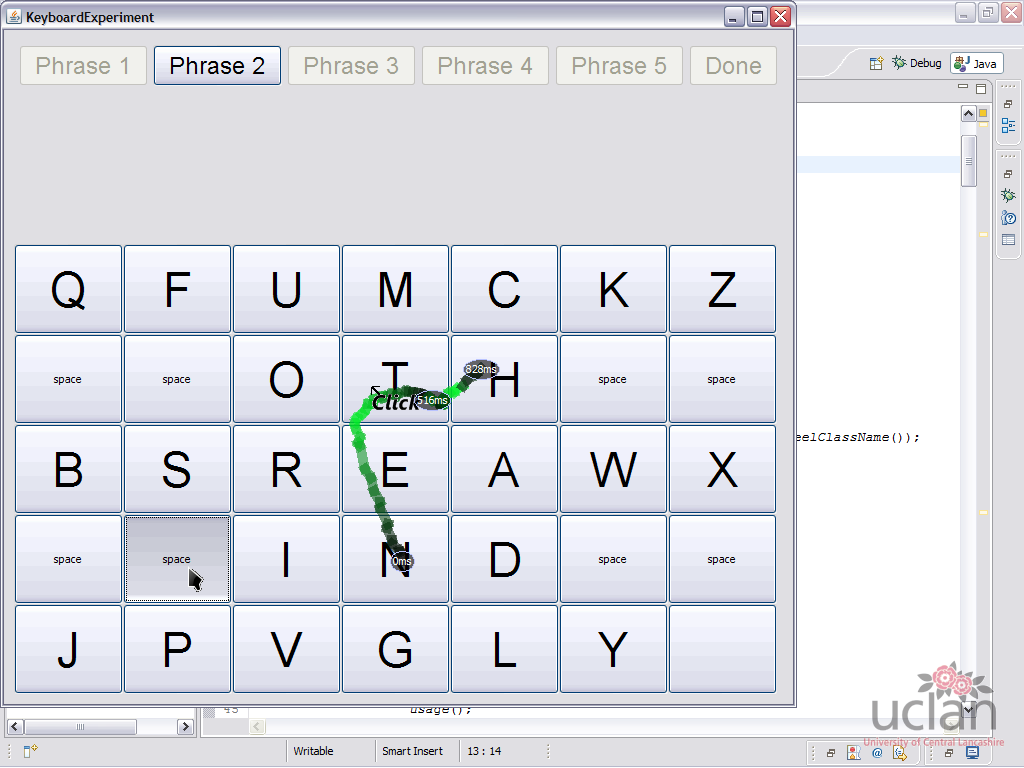

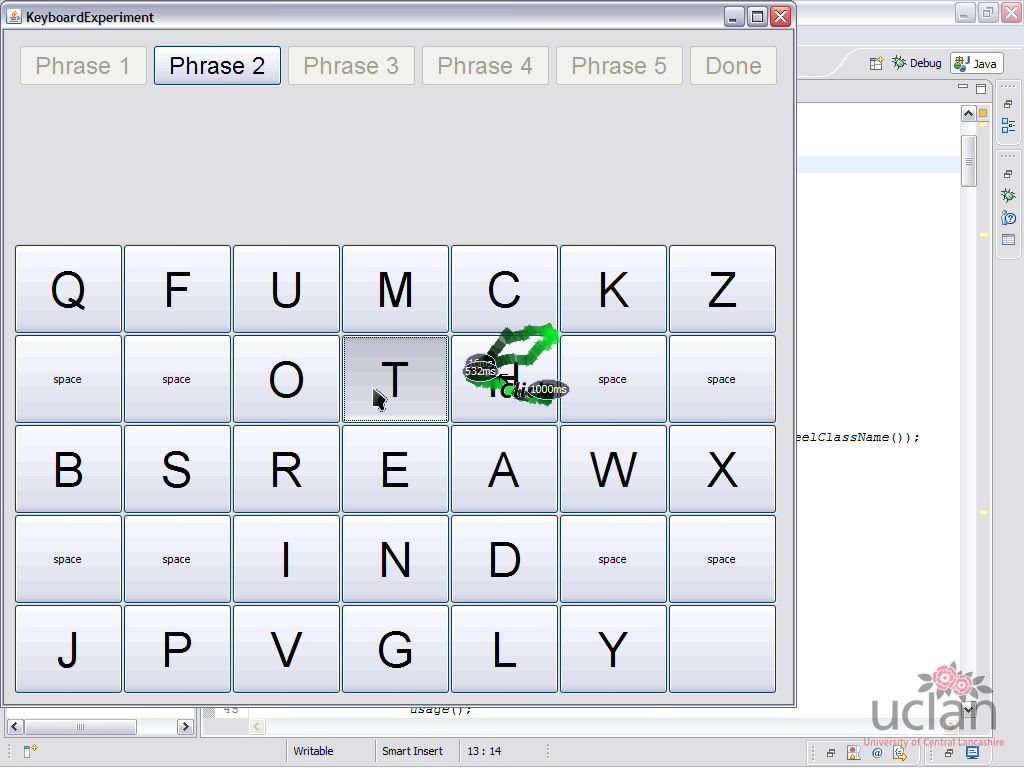

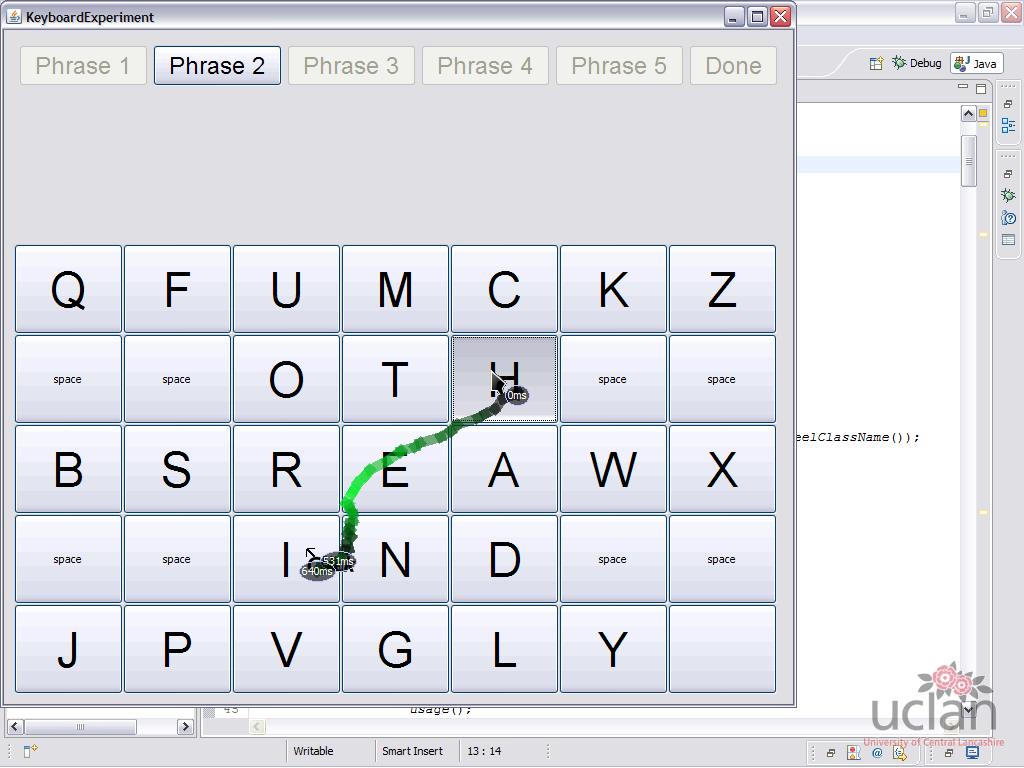

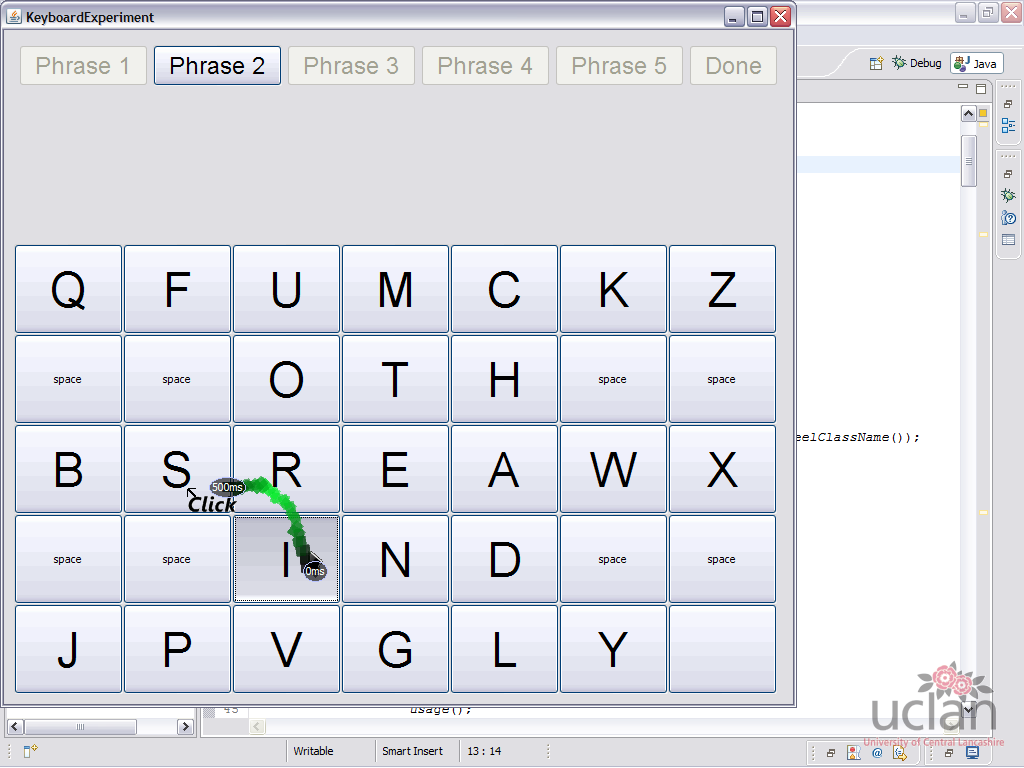

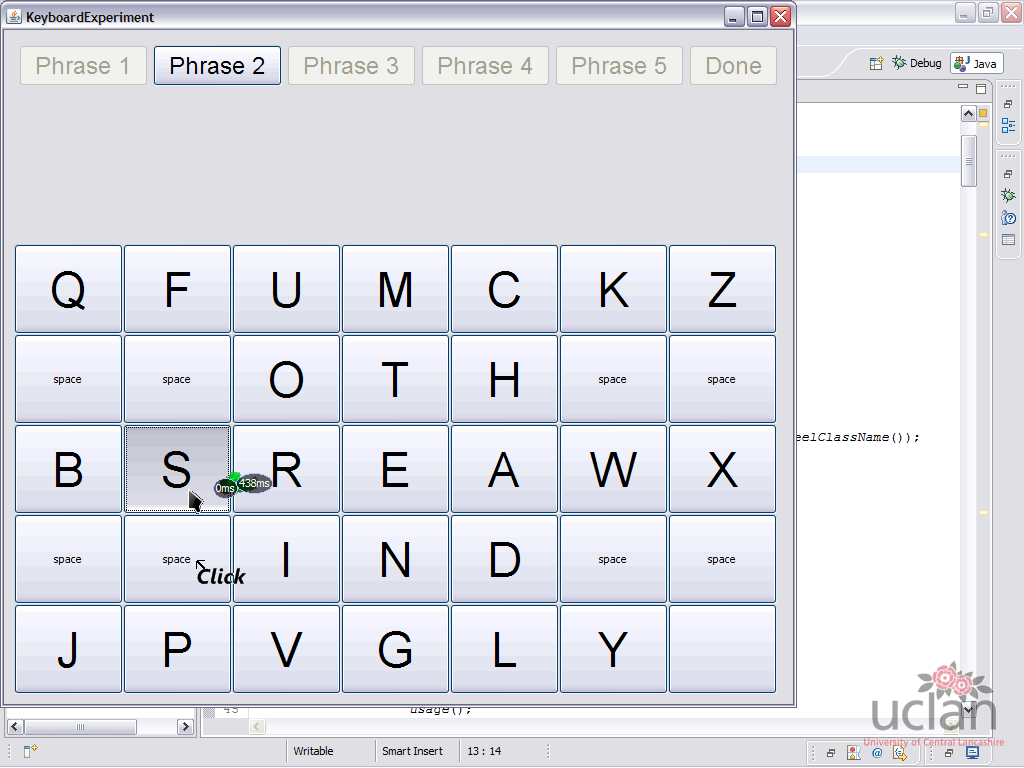

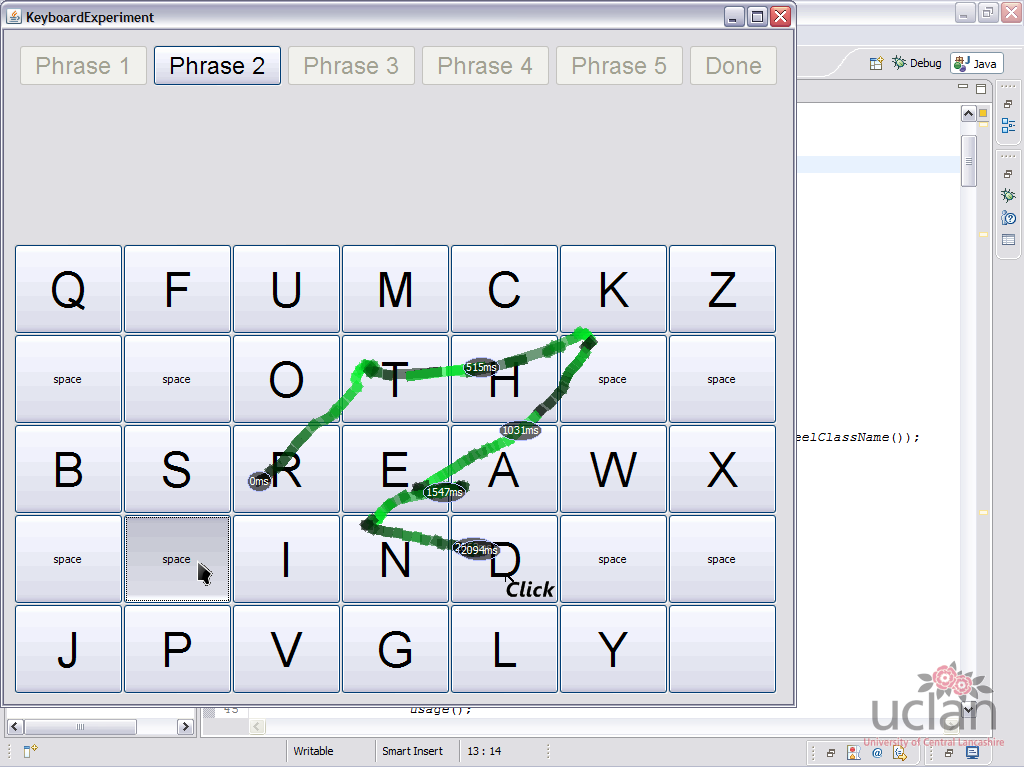

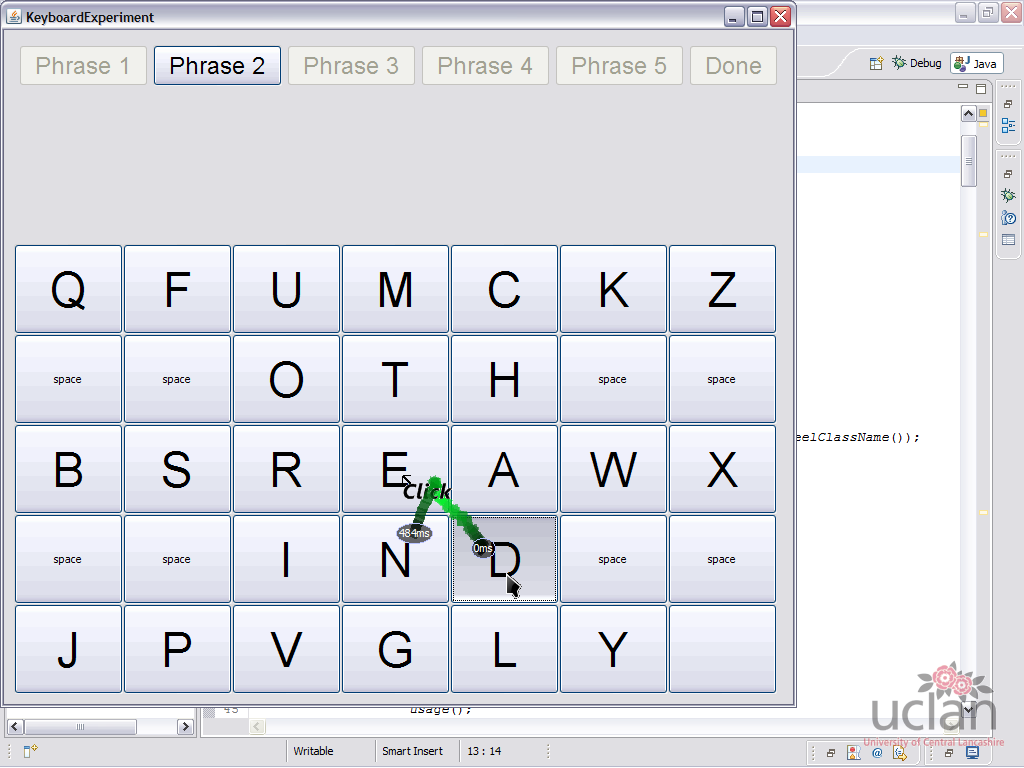

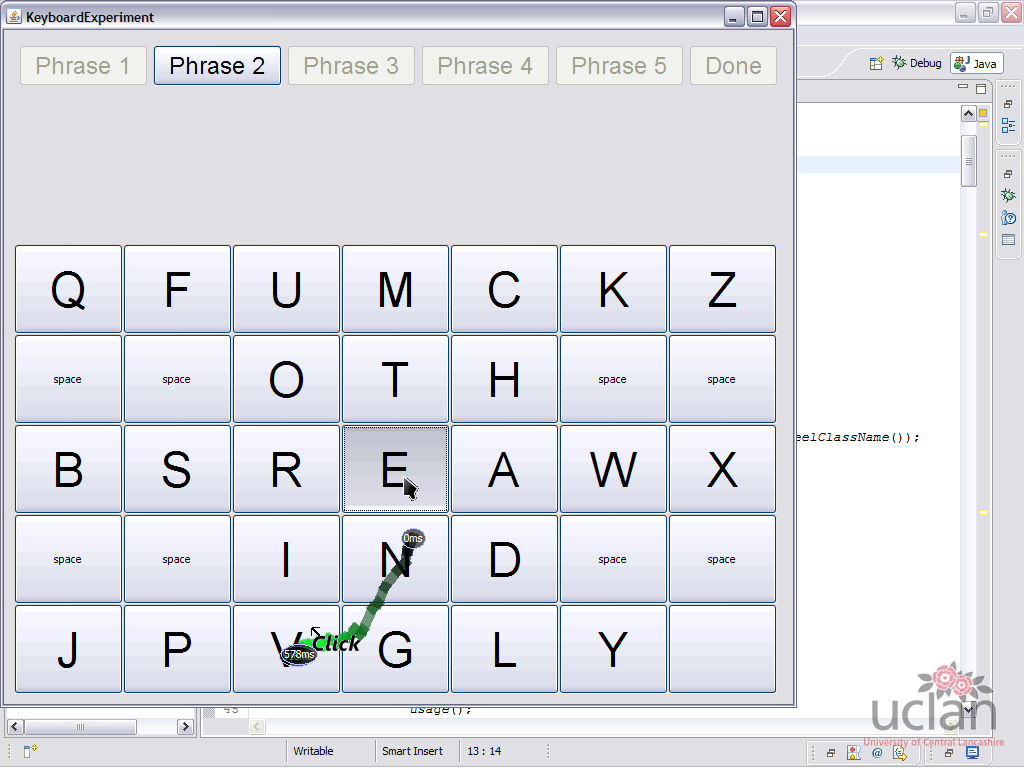

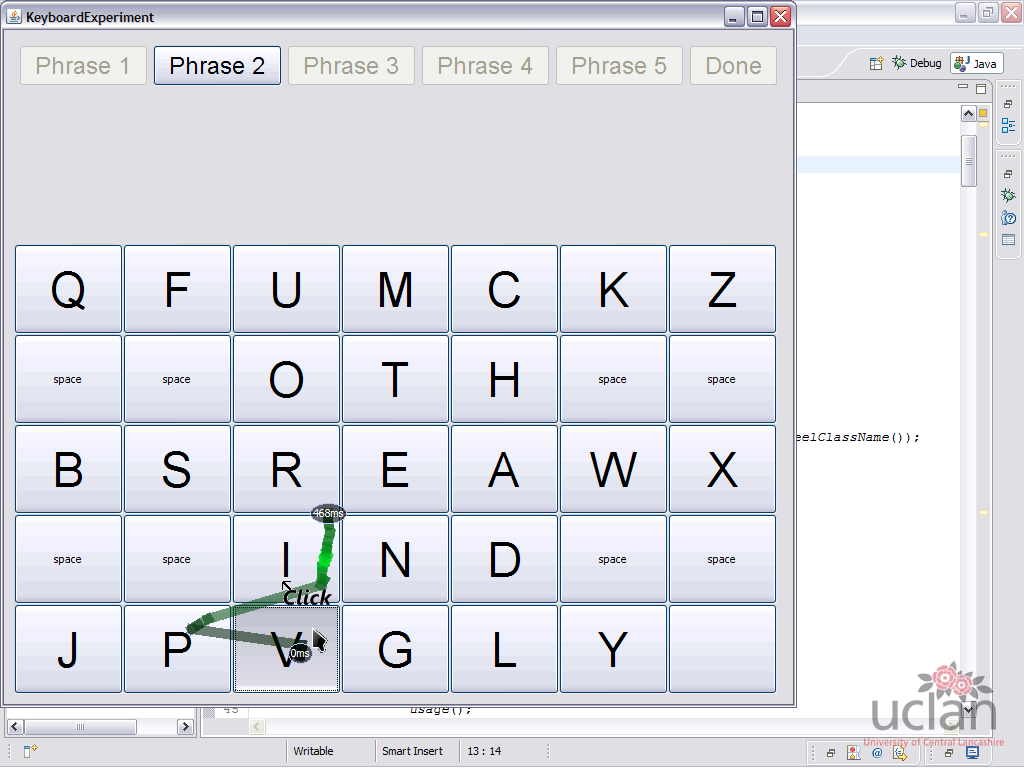

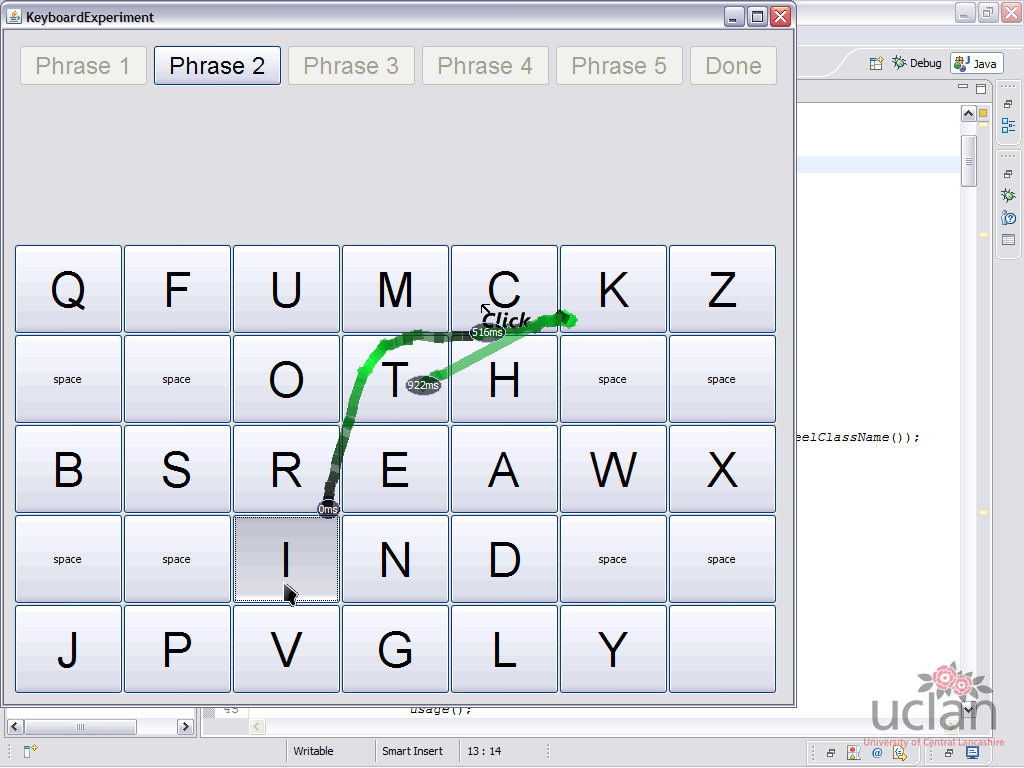

With this goal in mind, I developed a Qt-based C++ application that receives data from the gaze-tracking device and from the keyboard and mouse, and logs this data to CSV files. This was already useful, but we needed something more ergonomic. This is why I added a screenshot mode that draws the gathered data onto a single image. Here is the result:

The green and blue lines represent the paths taken by the gaze and the mouse, respectively. Some additional information is also overlaid, such as timestamps and mouse events.
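To make the overlay idea concrete, here is a minimal Java sketch of drawing a gaze path in green and a mouse path in blue on top of a screenshot. It is not the gtp code (which was written in C++/Qt): the class `PathOverlay` and its methods `render` and `drawPath` are names made up for this illustration, and timestamps and mouse events are left out.

```java
import java.awt.Color;
import java.awt.Graphics2D;
import java.awt.image.BufferedImage;

/** Hypothetical helper, for illustration only; not part of gtp. */
class PathOverlay {

    /** Draws one path as a polyline through the points (xs[i], ys[i]). */
    static void drawPath(BufferedImage img, int[] xs, int[] ys, Color color) {
        Graphics2D g = img.createGraphics();
        try {
            g.setColor(color);
            // Use the shorter array length so mismatched inputs cannot overrun.
            g.drawPolyline(xs, ys, Math.min(xs.length, ys.length));
        } finally {
            g.dispose();
        }
    }

    /** Returns a copy of the screenshot with both paths drawn on top of it. */
    static BufferedImage render(BufferedImage screenshot,
                                int[] gazeX, int[] gazeY,
                                int[] mouseX, int[] mouseY) {
        BufferedImage out = new BufferedImage(
                screenshot.getWidth(), screenshot.getHeight(),
                BufferedImage.TYPE_INT_RGB);

        // Copy the screenshot first so the original image stays untouched.
        Graphics2D g = out.createGraphics();
        try {
            g.drawImage(screenshot, 0, 0, null);
        } finally {
            g.dispose();
        }

        drawPath(out, gazeX, gazeY, Color.GREEN);  // gaze path
        drawPath(out, mouseX, mouseY, Color.BLUE); // mouse path
        return out;
    }
}
```

Drawing onto a copy rather than onto the screenshot itself keeps the raw capture available, so the same data could be rendered again with different options.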

But what if we want to analyse moving content such as videos or scrolling web pages? To address this problem, I added a video-capture mode. This is the only feature that is not portable: it is limited to Windows. It works by periodically taking a snapshot of the screen at a defined rate, drawing the data on top of it and saving the frames to a video encoded in DivX or MJPEG. Here is what the result looks like:

As I didn’t want every research application to have to be written in C++/Qt, I had to create bindings for other languages. I decided to use sockets to communicate with the gtp server and then wrote bindings in C/C++, Visual Basic .NET, C# and … Java. Using these bindings, it was very easy for any programmer to control the gtp server and also to get gaze-tracking information. An added benefit of this system is that multiple applications can access the eye-tracking information at the same time.
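As a rough idea of what a socket-based binding can look like from the Java side, here is a minimal client sketch. Everything about the wire format is an assumption made for this example: I pretend the server sends one text line per sample in the form `timestampMs,x,y`, which is not necessarily what gtp does. The names `GazeClient`, `GazeSample`, `parse` and `next` are likewise hypothetical.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

/** Hypothetical client; the "timestampMs,x,y" line format is an assumption. */
class GazeClient implements AutoCloseable {

    /** One gaze sample in screen coordinates. */
    static final class GazeSample {
        final long timestampMs;
        final int x;
        final int y;

        GazeSample(long timestampMs, int x, int y) {
            this.timestampMs = timestampMs;
            this.x = x;
            this.y = y;
        }
    }

    private final Socket socket;
    private final BufferedReader in;

    /** Opens a TCP connection to the server. */
    GazeClient(String host, int port) throws IOException {
        socket = new Socket(host, port);
        in = new BufferedReader(new InputStreamReader(
                socket.getInputStream(), StandardCharsets.UTF_8));
    }

    /** Parses one assumed "timestampMs,x,y" line into a sample. */
    static GazeSample parse(String line) {
        String[] parts = line.trim().split(",");
        if (parts.length != 3) {
            throw new IllegalArgumentException("expected 3 fields: " + line);
        }
        return new GazeSample(
                Long.parseLong(parts[0].trim()),
                Integer.parseInt(parts[1].trim()),
                Integer.parseInt(parts[2].trim()));
    }

    /** Blocks until the next sample arrives; returns null once the server closes the stream. */
    GazeSample next() throws IOException {
        String line = in.readLine();
        return line == null ? null : parse(line);
    }

    @Override
    public void close() throws IOException {
        socket.close();
    }
}
```

With a design like this, each application opens its own connection, which is what lets several programs read the same gaze data at once. A caller would typically wrap the client in a try-with-resources block and loop on `next()` until it returns `null`.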

My first demonstration of these bindings was what I had actually been asked to do, i.e. analysing virtual keyboards in Java. Here are the results:

My second demonstration is a game I made the night before the presentation of my project. In this game, the player uses their gaze to aim at flying superheroes and then shoots them down by pressing the space key.

The above video is a screencast of the game recorded by gtp. The player therefore doesn’t see the green line, only the red dot. The game worked quite well and I was able to aim at anything quickly. However, in this video the computer had too many things to compute at the same time, so it became laggy and unresponsive. This explains why I missed so many superheroes. To be clear, I was using a very cheap laptop I had bought a year earlier.